Inference-Time Scaling for 3D Diffusion Models

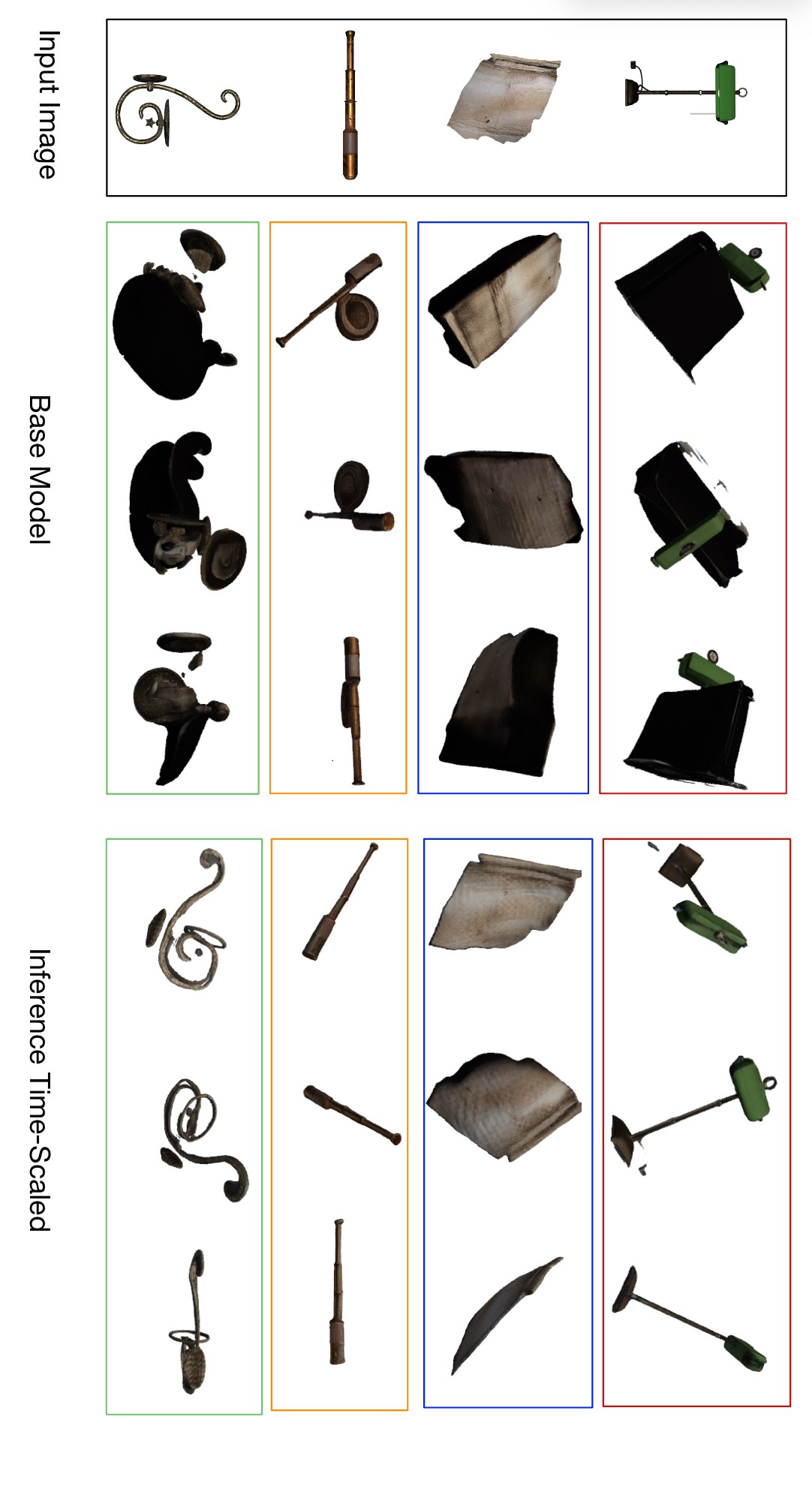

3D diffusion models have become a powerful tool for generating high-quality meshes from 2D images, but even state-of-the-art models like InstantMesh still produce artifacts, perspective errors, and poor detail in their outputs. In this project, we developed an inference-time scaling algorithm that improves the quality of 3D generations without retraining the model. Our method draws inspiration from similar techniques used to improve large language models at inference time.

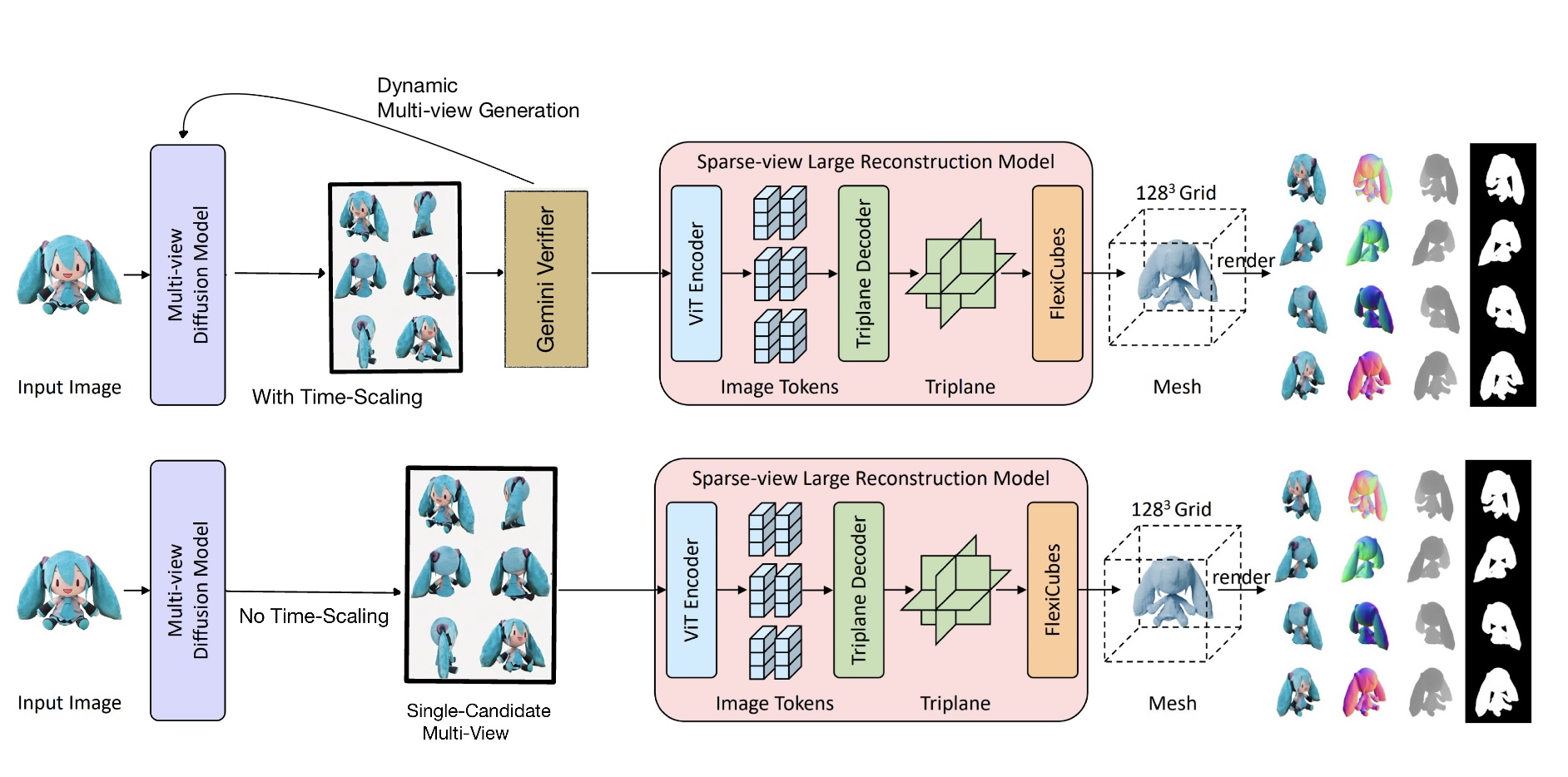

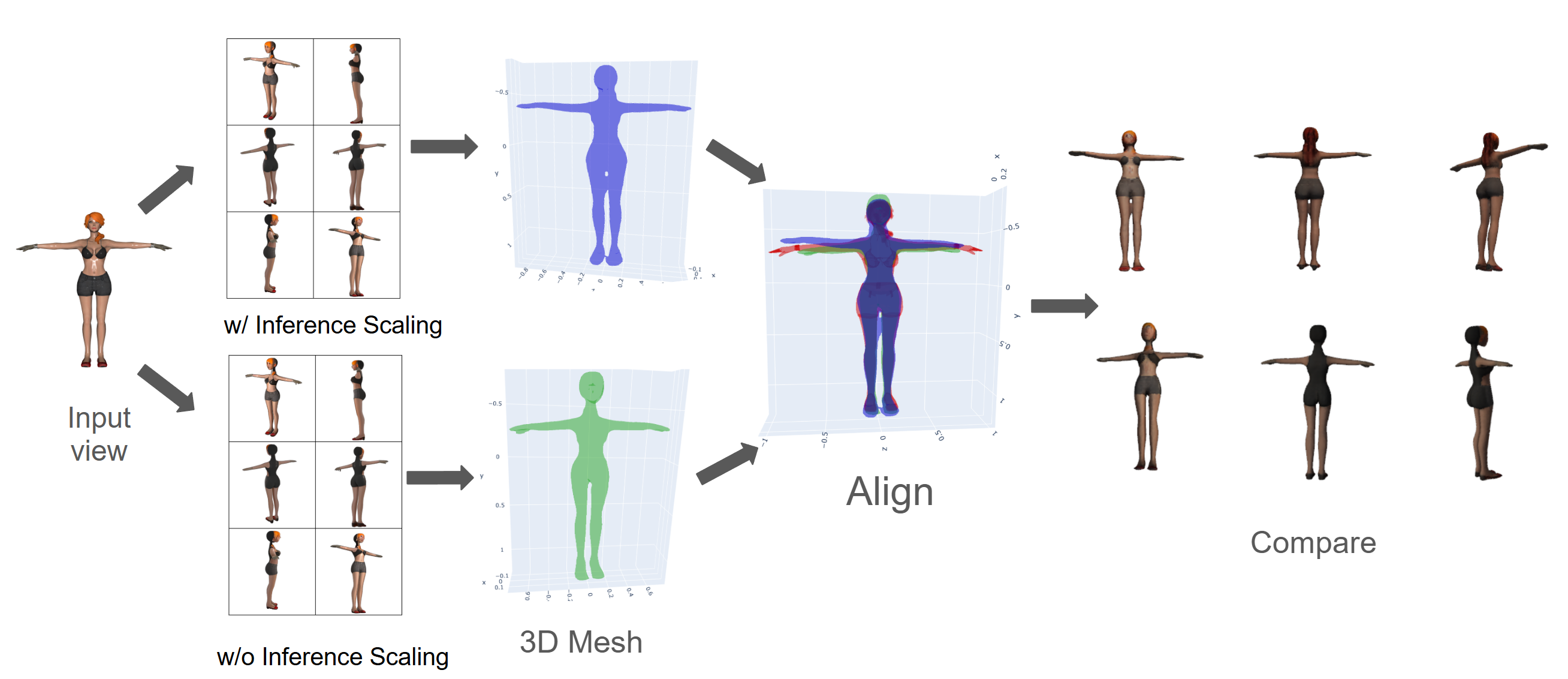

We proposed an Adaptive Best-of-N sampling strategy that dynamically selects the best output from multiple diffusion attempts using a multimodal LLM grader. The grader, implemented with Gemini, scores each candidate multiview output on three criteria: consistency with the input image, aesthetic quality, and coherence across views. The algorithm allocates more compute to harder cases (where outputs initially score poorly) and stops early once a high-quality result is found.
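
The adaptive loop can be sketched roughly as follows. This is a minimal Python sketch under stated assumptions, not the project's implementation. `generate` is a hypothetical stand-in for one InstantMesh multiview diffusion run, seeded by the sample index. `grade` is a hypothetical stand-in for the Gemini grader and is assumed to return a single score in [0, 1] that already combines the three criteria. The budget and threshold parameters (`n_min`, `n_max`, `accept`, `early_stop`) and their defaults are also hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class SearchResult:
    best: Any            # highest-scoring candidate seen so far
    score: float         # its grader score
    samples_used: int    # number of diffusion runs spent on this input


def adaptive_best_of_n(
    generate: Callable[[int], Any],   # hypothetical: one diffusion run for a given seed
    grade: Callable[[Any], float],    # hypothetical: LLM grader, score in [0, 1]
    n_min: int = 2,                   # hypothetical: samples drawn before an "acceptable" stop
    n_max: int = 8,                   # hypothetical: hard cap on samples per input
    accept: float = 0.7,              # hypothetical: good-enough score after n_min samples
    early_stop: float = 0.9,          # hypothetical: score that ends the search immediately
) -> SearchResult:
    if not 1 <= n_min <= n_max:
        raise ValueError("require 1 <= n_min <= n_max")
    best, best_score, used = None, float("-inf"), 0
    for seed in range(n_max):
        candidate = generate(seed)
        score = grade(candidate)
        used += 1
        if score > best_score:
            best, best_score = candidate, score
        # Short-circuit as soon as any candidate is clearly high quality.
        if best_score >= early_stop:
            break
        # Easy case: the minimum batch already produced an acceptable result.
        if used >= n_min and best_score >= accept:
            break
        # Otherwise this is a hard case, so keep spending samples up to n_max.
    return SearchResult(best, best_score, used)


if __name__ == "__main__":
    # Toy stand-ins: each candidate is just its seed, and the "grader" looks up a
    # fixed score. Real use would call the diffusion model and the LLM grader.
    easy = [0.95, 0.2, 0.3]
    hard = [0.1, 0.3, 0.6, 0.4, 0.5, 0.2, 0.65, 0.3]
    print(adaptive_best_of_n(lambda s: s, lambda s: easy[s], n_max=3))
    # SearchResult(best=0, score=0.95, samples_used=1)
    print(adaptive_best_of_n(lambda s: s, lambda s: hard[s]))
    # SearchResult(best=6, score=0.65, samples_used=8)
```

In this sketch, easy inputs exit after one or two samples. Inputs whose best score stays below `accept` consume the full `n_max` budget, and that is how the loop concentrates compute on hard cases.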

We evaluated our approach on 145 objects sampled from the Objaverse-XL dataset, comparing the original InstantMesh model with our inference-time scaled version. The scaled model achieved significant improvements across all evaluation metrics (PSNR, SSIM, LPIPS, and F-Score), producing cleaner, more consistent reconstructions. Notably, these gains required no modification or retraining of the diffusion backbone, which makes inference-time scaling a compute-efficient way to boost quality in 3D generative pipelines.
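
For reference, two of these metrics can be sketched in pure Python using their standard definitions. PSNR compares rendered images pixel by pixel. F-Score compares two point clouds, such as points sampled from the generated and ground-truth meshes, at a distance threshold `tau`. SSIM and LPIPS are omitted here because LPIPS relies on a pretrained network. The flat-list image representation and the choice of `tau` are assumptions for illustration; the project's actual evaluation settings (render resolution, threshold, point sampling) are not stated above.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-sized images,
    given here (an assumption) as flat pixel sequences in [0, max_val]."""
    if not pred or len(pred) != len(target):
        raise ValueError("images must be non-empty and the same size")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)


def f_score(pred: Sequence[Point], gt: Sequence[Point], tau: float) -> float:
    """F-Score at distance threshold tau between two point clouds.
    Uses brute-force nearest neighbours, so it only suits small clouds."""
    if not pred or not gt:
        raise ValueError("point clouds must be non-empty")

    def frac_within(src: Sequence[Point], dst: Sequence[Point]) -> float:
        # Fraction of points in src whose nearest neighbour in dst is within tau.
        hits = sum(1 for a in src if min(math.dist(a, b) for b in dst) <= tau)
        return hits / len(src)

    precision = frac_within(pred, gt)  # generated points close to the ground truth
    recall = frac_within(gt, pred)     # ground-truth points covered by the generation
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For example, `f_score([(0, 0, 0), (1, 0, 0)], [(0, 0, 0), (5, 0, 0)], tau=0.5)` gives a precision of 0.5 and a recall of 0.5, and therefore an F-Score of 0.5.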